Long Short-Term Memory (LSTM) networks have transformed how artificial intelligence handles sequential data, enabling applications in language translation, speech recognition, and predictive analytics.

As businesses adopt more AI-powered products and services, a working knowledge of LSTM technology is becoming important for data scientists, machine learning engineers, and technology leaders.

What Are LSTM Neural Networks?

LSTM (Long Short-Term Memory) neural networks are specialized recurrent neural networks (RNNs) designed to overcome the major shortcomings of traditional recurrent architectures. Hochreiter and Schmidhuber introduced LSTMs in 1997 so that networks could learn long-term dependencies in sequential data more reliably than earlier recurrent networks could.

LSTMs are therefore well suited to applications where information from earlier inputs substantially influences the current prediction.

Unlike standard RNNs, which struggle to retain information over long sequences, LSTM networks maintain both short-term and long-term memory through a gate-based structure. The gates let the network learn what to remember and what to forget, which tends to make predictions more accurate and training more stable.

The Problem with Traditional RNNs

Before diving into LSTM architecture, it’s essential to understand why traditional RNNs fall short in handling long-term dependencies:

Vanishing Gradient Problem

During training, gradients become progressively smaller as they propagate backward through time steps. This phenomenon makes it nearly impossible for the network to learn relationships between distant elements in a sequence, severely limiting the model’s ability to capture long-term patterns.
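The shrinkage can be seen in a toy case. The sketch below assumes a single-unit RNN, h_t = tanh(w · h_{t-1}), with a hand-picked weight; the function name and values are illustrative only. It multiplies the per-step derivatives that backpropagation through time would chain together.

```python
import math

def gradient_through_time(w, steps, h0=0.5):
    """Return |d h_T / d h_0| for the scalar RNN h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return abs(grad)

# Assumed weight w = 0.9: every factor is at most 0.9, so the product decays.
for steps in (1, 10, 50):
    print(steps, gradient_through_time(w=0.9, steps=steps))
```

Each extra time step multiplies the gradient by a factor below 1, so the signal reaching an input 50 steps back is a small fraction of the signal reaching the most recent input.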

Exploding Gradient Problem

Conversely, gradients can sometimes grow exponentially large, causing training instability and erratic model behavior. This makes consistent learning extremely difficult and often leads to training failures.
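A rough illustration, assuming a linear recurrence h_t = w · h_{t-1} with |w| > 1, where the gradient over T steps is simply w^T. The sketch also shows gradient clipping by norm, a common mitigation that this article does not otherwise cover; the helper name `clip_by_norm` is hypothetical.

```python
import math

def clip_by_norm(grads, max_norm):
    """Rescale a gradient vector so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

# In a linear recurrence h_t = w * h_{t-1}, d h_T / d h_0 = w ** T.
w, steps = 1.5, 50
exploded = w ** steps  # grows exponentially with the number of steps
clipped = clip_by_norm([exploded, -exploded], max_norm=1.0)
print(exploded)
print(clipped)
```

Clipping keeps the direction of the update and only limits its size, which is why it is often paired with recurrent models.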

These fundamental issues prevent traditional RNNs from effectively processing sequences where early information remains relevant for later predictions—a common requirement in real-world applications.

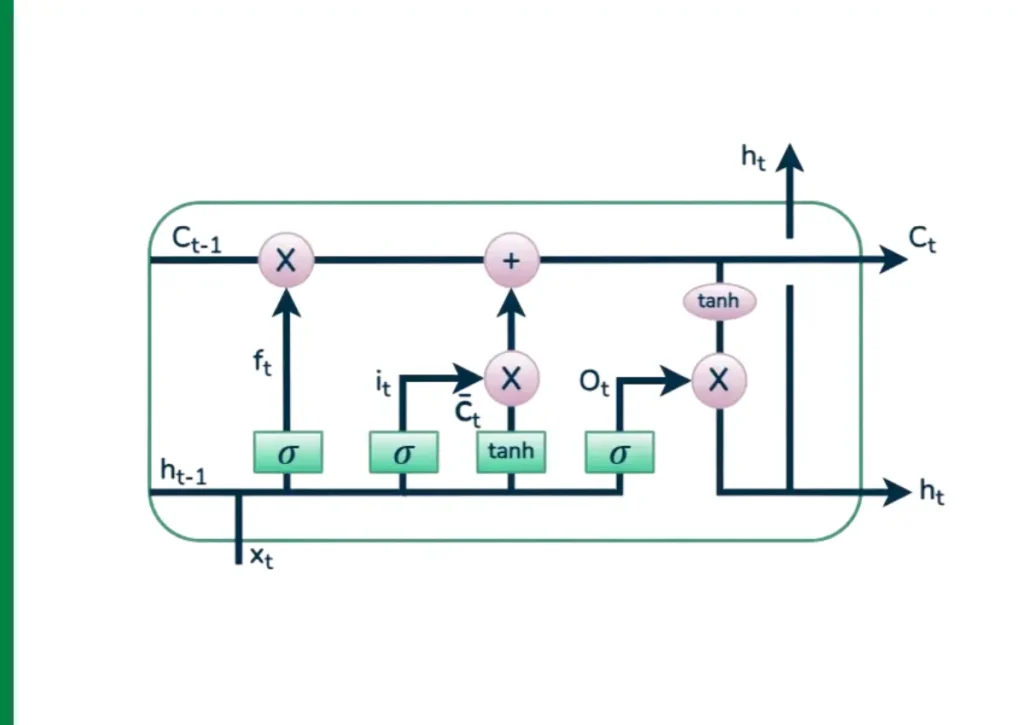

LSTM Architecture: The Three-Gate System

LSTM networks solve these problems through a sophisticated memory cell controlled by three specialized gates:

1. Forget Gate

The forget gate determines which information should be discarded from the cell state. It processes the current input and previous hidden state through a sigmoid activation function, producing values between 0 and 1. A value close to 0 means “forget this information,” while a value near 1 means “keep this information.”

Mathematical representation: $f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)$
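As a minimal sketch of that formula in plain Python, the snippet below concatenates the previous hidden state with the current input and applies a sigmoid per unit. The weights are hand-picked for illustration, not learned, and the function names are assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forget_gate(W_f, b_f, h_prev, x_t):
    """Compute sigmoid(W_f . [h_prev, x_t] + b_f), one value per cell-state unit."""
    concat = h_prev + x_t  # list concatenation stands in for [h_{t-1}, x_t]
    return [
        sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
        for row, b in zip(W_f, b_f)
    ]

# Hypothetical weights: 2 hidden units, 1 input feature.
W_f = [[0.0, 0.0, 5.0],    # unit 0 keeps its memory when x_t is large
       [0.0, 0.0, -5.0]]   # unit 1 forgets when x_t is large
b_f = [0.0, 0.0]
f_t = forget_gate(W_f, b_f, h_prev=[0.1, -0.2], x_t=[1.0])
print(f_t)
```

With these weights the first unit's gate is close to 1 and the second is close to 0, matching the "keep" and "forget" readings described above.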

2. Input Gate

The input gate controls what new information gets stored in the cell state. It works in two phases: first, a sigmoid layer decides which values to update, then a tanh layer creates candidate values that could be added to the state.

Mathematical representation:

$i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)$

$\hat{C}_t = \tanh(W_c \cdot [h_{t-1}, x_t] + b_c)$
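The formulas above define the gate and the candidate values but not how they change the cell state. In the standard LSTM formulation, the forget gate scales the old state and the input gate scales the candidate before the two are added. Here $C_{t-1}$ (the previous cell state) and $\odot$ (element-wise multiplication) are notation assumed for this note:

$$C_t = f_t \odot C_{t-1} + i_t \odot \hat{C}_t$$

Because the old state enters through an addition rather than another squashing function, a forget gate near 1 lets information pass through a step almost unchanged.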

3. Output Gate

The output gate determines what parts of the cell state become the output. It filters the cell state through a tanh function and multiplies it by the output of a sigmoid gate, ensuring only relevant information passes through.

Mathematical representation: $o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)$
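The gate value alone is not yet the output. Following the description above, in the standard formulation the hidden state is the tanh-filtered cell state scaled by the gate, with $\odot$ again assumed to mean element-wise multiplication:

$$h_t = o_t \odot \tanh(C_t)$$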

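This three-gate system gives LSTMs fine-grained control over information flow. Because the cell state is updated additively rather than being repeatedly squashed, gradients can survive across many time steps, which largely mitigates the vanishing gradient problem that plagues traditional RNNs.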
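Putting the gates together, one LSTM time step might look like the sketch below. It uses plain Python lists instead of a tensor library, and the parameter names (`Wf`, `bf`, and so on) and the hand-set weights are assumptions chosen to make the behavior visible; a real model would learn them.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Return W . v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(params, h_prev, c_prev, x_t):
    """One LSTM time step; returns (h_t, c_t)."""
    concat = h_prev + x_t  # [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(params["Wf"], params["bf"], concat)]
    i = [sigmoid(z) for z in affine(params["Wi"], params["bi"], concat)]
    c_hat = [math.tanh(z) for z in affine(params["Wc"], params["bc"], concat)]
    o = [sigmoid(z) for z in affine(params["Wo"], params["bo"], concat)]
    # Cell state: forget part of the old state, add part of the candidate.
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_hat)]
    # Hidden state: tanh of the cell state, filtered by the output gate.
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t

# Hypothetical parameters: 1 hidden unit, 1 input feature.
# Forget gate stays near 1 and input gate near 0, so the cell keeps its memory.
params = {
    "Wf": [[0.0, 0.0]], "bf": [10.0],
    "Wi": [[0.0, 0.0]], "bi": [-10.0],
    "Wc": [[0.0, 1.0]], "bc": [0.0],
    "Wo": [[0.0, 0.0]], "bo": [10.0],
}
h, c = [0.0], [1.0]
for x in [0.3, -0.7, 0.5] * 10:  # 30 steps of unrelated input
    h, c = lstm_step(params, h, c, [x])
print(c, h)
```

After 30 steps of unrelated input the cell state is still close to its starting value of 1.0, which is the selective-memory behavior the gates are meant to provide.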

Key Applications of LSTM Neural Networks

Natural Language Processing

LSTMs excel in language-related tasks including:

- Machine Translation: Converting text between languages while maintaining context and meaning

- Text Summarization: Generating concise summaries of lengthy documents

- Sentiment Analysis: Determining emotional tone in social media posts, reviews, and customer feedback

Speech and Audio Processing

- Speech Recognition: Converting spoken words to text with high accuracy

- Voice Command Recognition: Enabling voice-activated systems and virtual assistants

- Audio Classification: Identifying sounds, music genres, or speaker recognition

Time Series Forecasting

- Financial Modeling: Predicting stock prices, market trends, and economic indicators

- Weather Prediction: Analyzing atmospheric patterns for accurate forecasting

- Energy Consumption: Optimizing power grid management and renewable energy distribution
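Forecasting tasks like these are usually framed as supervised learning by slicing the series into fixed-length input windows, each paired with a future value to predict. The helper below is a minimal sketch of that preparation step; the function name, window length, and readings are made up for illustration.

```python
def make_windows(series, window, horizon=1):
    """Split a 1-D series into (input window, target) pairs for supervised training."""
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs

# Hypothetical hourly energy readings.
readings = [10, 12, 15, 14, 13, 16, 18]
for inputs, target in make_windows(readings, window=3):
    print(inputs, "->", target)
```

Each window would then be fed to the LSTM one value per time step, with the target used to compute the training loss.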

Business Intelligence

- Anomaly Detection: Identifying fraudulent transactions or network security breaches

- Recommendation Systems: Personalizing content suggestions based on user behavior patterns

- Supply Chain Optimization: Predicting demand fluctuations and inventory requirements
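For the anomaly detection case, one common pattern is to let a sequence model forecast the next value and flag points where the actual value strays far from the forecast. The sketch below assumes the forecasts already exist (the `predicted` list stands in for the output of a trained LSTM) and uses an illustrative threshold based on the median error.

```python
import statistics

def flag_anomalies(actual, predicted, threshold=3.0):
    """Return indices whose absolute forecast error exceeds threshold x the median error."""
    errors = [abs(a - p) for a, p in zip(actual, predicted)]
    baseline = max(statistics.median(errors), 1e-9)  # guard against a zero median
    return [idx for idx, err in enumerate(errors) if err > threshold * baseline]

# Hypothetical transaction amounts and model forecasts.
actual = [100, 102, 98, 101, 250, 99]
predicted = [101, 101, 99, 100, 100, 100]
print(flag_anomalies(actual, predicted))
```

The median is used here rather than the mean so that a single large error does not raise the baseline and hide itself.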

Industry Applications and Success Stories

Healthcare and Medical Research

During the COVID-19 pandemic, researchers successfully employed LSTM networks to model disease spread patterns, helping hospitals and governments prepare for case surges. These models analyzed multiple variables, including population density, mobility patterns, and intervention effectiveness.

Energy and Manufacturing

Oil drilling operations use LSTM networks to optimize penetration rates by analyzing complex variables like hydraulic pressure, geological conditions, and equipment specifications. This application has resulted in significant cost savings and improved operational efficiency.

Environmental Science

Water management organizations leverage LSTM technology for evaporation modeling and resource planning. These systems consider weather patterns, seasonal variations, and geographical factors to provide accurate water availability predictions.

Advantages of LSTM Networks

Superior Memory Management

LSTMs can retain relevant information across hundreds or thousands of time steps, making them ideal for applications requiring long-term context awareness.

Computational Efficiency

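An LSTM computes all of its gates at every time step, so a single step costs more than a plain RNN step. The efficiency gain is in how memory is used: the gates let each step leave most of the cell state untouched and change only the components that matter, so the network does not have to re-encode old context at every step.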

High Prediction Accuracy

The ability to maintain both short and long-term memory enables LSTMs to make more informed predictions based on comprehensive historical context.

Gradient Stability

The gate-based architecture greatly reduces the vanishing gradient problem, giving more stable and consistent training. Exploding gradients can still occur and are usually handled with gradient clipping.

Challenges and Limitations

Computational Requirements

LSTM networks demand significant computational resources and memory compared to simpler neural network architectures. This can be a limiting factor for resource-constrained environments.
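A quick parameter count gives a sense of the overhead. The sketch below assumes the textbook formulation, in which each gate has one weight matrix over the concatenated hidden state and input plus one bias vector; library implementations may differ slightly, for example by keeping separate bias vectors. The layer sizes are arbitrary.

```python
def rnn_params(input_size, hidden_size):
    """Weights and biases in a plain RNN layer: one matrix over [h_{t-1}, x_t]."""
    return hidden_size * (hidden_size + input_size) + hidden_size

def lstm_params(input_size, hidden_size):
    """An LSTM has four such blocks: forget, input, candidate, and output."""
    return 4 * rnn_params(input_size, hidden_size)

print(rnn_params(128, 256))   # 98560
print(lstm_params(128, 256))  # 394240
```

Under these assumptions an LSTM layer carries four times the parameters of a plain RNN layer of the same width, with a matching increase in per-step computation.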

Overfitting Susceptibility

The complexity of LSTM architecture can lead to overfitting, where the model performs well on training data but struggles with new, unseen inputs.
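One widely used guard against this is early stopping: training halts once the validation loss has stopped improving for a set number of epochs. The sketch below is framework-independent and works on a plain list of losses; the function name, `patience` value, and loss figures are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch) given per-epoch validation losses."""
    best = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: improving at first, then rising as the model overfits.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
print(stop_epoch, best_epoch)
```

In practice the weights saved at `best_epoch` would be restored, since later epochs fit the training data more closely but generalize worse.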

Implementation Complexity

Designing and tuning LSTM networks requires deep understanding of the architecture and careful hyperparameter optimization.

Future of LSTM Technology

As artificial intelligence continues evolving, LSTM networks remain foundational to many breakthrough applications. Recent developments in transformer architectures and attention mechanisms have complemented LSTM capabilities, creating hybrid models that leverage the strengths of both approaches.

The integration of LSTM networks with other deep learning technologies promises continued innovation in areas like autonomous vehicles, advanced robotics, and personalized medicine.

Final Thoughts

LSTM neural networks remain important in 2025 for processing sequential data in areas such as language processing, speech recognition, and forecasting. Their advantage over traditional RNNs is a memory structure that can capture long-term patterns.

Although newer models such as Transformers exist, LSTMs remain a strong choice where efficiency and reliable sequence memory matter. Challenges such as high computational requirements and implementation complexity remain unresolved, but hybrid approaches are opening up new possibilities.